How to Create and Use Your Own Custom Dataset in TensorFlow

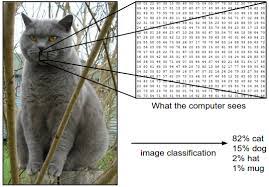

TensorFlow is a powerful open-source machine learning library that lets you build custom datasets and train models on them. This is helpful for projects that need data specific to your problem, or when you want to try out new machine learning techniques without relying on pre-existing datasets. In this article, we will show you how to create your own custom dataset in TensorFlow and how to use it for image classification.

To begin, we first need some data. Luckily, there are many publicly available datasets that can be used for this purpose. One such dataset is the MNIST training set, which consists of 60,000 images of handwritten digits (0-9). Next, we need to import the necessary libraries into our Python script or notebook (.ipynb). We'll need the tensorflow package itself, as well as its tf.data.Dataset class for creating custom datasets.

Next, we'll create our tf.data.Dataset object from the MNIST training arrays, for example with tf.data.Dataset.from_tensor_slices. Each MNIST image is 28×28 pixels with a single grayscale channel, and each label is an integer from 0 to 9. The dataset infers the shape and dtype of its elements from the tensors it is given, so they do not need to be specified separately.
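As a rough illustration of this step, the sketch below builds a tf.data.Dataset from in-memory arrays. It assumes random stand-in arrays with MNIST's shapes instead of the real files, and the preprocess helper, buffer size, and batch size are illustrative choices rather than anything prescribed by this article.

```python
import numpy as np
import tensorflow as tf

# Stand-in arrays with MNIST's shapes (assumption: real data would replace these).
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(100, 28, 28), dtype=np.uint8)
labels = rng.integers(0, 10, size=(100,), dtype=np.int64)


def preprocess(image, label):
    # Scale pixels to [0, 1] and add a channel axis: (28, 28) -> (28, 28, 1).
    image = tf.cast(image, tf.float32) / 255.0
    return tf.expand_dims(image, axis=-1), label


dataset = (
    tf.data.Dataset.from_tensor_slices((images, labels))
    .map(preprocess)
    .shuffle(buffer_size=100)
    .batch(32)
)

# Pull one batch to inspect the element shapes the pipeline produces.
first_images, first_labels = next(iter(dataset))
print(first_images.shape, first_labels.shape)
```

Shuffling before batching means each epoch sees the examples in a different order, which is the usual arrangement for training.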

Once our tf.data.Dataset object has been created, we can start training our models! To do this, we first need a model definition.

In this tutorial, we will show you how to create and use your own custom dataset in TensorFlow. A well-built input pipeline makes it easier to feed data to deep learning models efficiently during training.

Datasets are a crucial part of deep learning, as they provide the training data from which a model learns to recognise patterns and make predictions. By creating your own dataset, you have more control over whether the data is accurate and representative of the problem you are trying to solve.

There are a few different ways to create a dataset in TensorFlow. In this tutorial, we will use the tf.data module. First, we will create files that contain the training data. Next, we will convert these files into the TFRecord format so that they can be read efficiently through the tf.data API. Finally, we will train a neural network on this dataset and evaluate its performance.

To start, let's create our training data files. We will use two files for this tutorial: one for images and one for text documents. We will first generate random numbers between 0 and 1 using tf.random.uniform to serve as placeholder training data. An image is represented as a tensor of shape (height, width, channels), where channels is 1 for grayscale and 3 for RGB. The tf.image module is not a single function but a collection of functions that operate on such tensors, for example to resize an image or convert it between color spaces.
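A minimal sketch of generating synthetic images this way is shown below. The batch size, image size, and seed are assumptions chosen for illustration, and the two tf.image calls are just examples of what the module offers.

```python
import tensorflow as tf

# Illustrative sizes (assumptions, not values from this tutorial).
num_images, height, width, channels = 8, 32, 32, 3

# Uniform values in [0, 1): one float32 tensor of shape (N, H, W, C).
images = tf.random.uniform(
    (num_images, height, width, channels), minval=0.0, maxval=1.0, seed=0
)

# tf.image functions operate on image tensors, e.g. RGB -> one gray channel.
gray = tf.image.rgb_to_grayscale(images)

# Resize every image in the batch to 16x16 pixels.
resized = tf.image.resize(gray, (16, 16))

print(images.shape, gray.shape, resized.shape)
```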

Datasets are a critical part of any machine learning project, and they can be created in a variety of ways. In this blog post, we’ll show you how to create your own custom dataset in TensorFlow.

First, let's set up TensorFlow. In TensorFlow 2 there is no need to create a session explicitly, since operations run eagerly by default. We'll use the tf.data module to create a simple dataset.

Next, we'll import tensorflow, which makes the tf.data module available, and create our dataset. We'll use the tf.data.TFRecordDataset class to read it.

A TFRecord file stores a sequence of binary records, each of which usually holds one serialized tf.train.Example. We write such a file with tf.io.TFRecordWriter and read it back with the tf.data.TFRecordDataset() constructor. The constructor takes the filename (or a list of filenames) as its main argument, along with optional arguments such as compression_type and num_parallel_reads.

Next, we'll need to load our dataset into TensorFlow. We can do this by passing the path of the file containing our data to tf.data.TFRecordDataset. The records come back as serialized byte strings, so we parse each one with tf.io.parse_single_example and a feature description that gives the name, shape, and type of every stored feature.
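The write-then-read round trip can be sketched as follows. The toy feature vectors, the feature names "x" and "y", and the temporary file path are all hypothetical and exist only to keep the example self-contained.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Hypothetical toy data: 5 feature vectors of length 4 with integer labels.
features = np.arange(20, dtype=np.float32).reshape(5, 4)
labels = np.array([0, 1, 0, 1, 1], dtype=np.int64)

path = os.path.join(tempfile.mkdtemp(), "toy.tfrecord")

# Write: each record is one serialized tf.train.Example.
with tf.io.TFRecordWriter(path) as writer:
    for x, y in zip(features, labels):
        example = tf.train.Example(features=tf.train.Features(feature={
            "x": tf.train.Feature(float_list=tf.train.FloatList(value=x.tolist())),
            "y": tf.train.Feature(int64_list=tf.train.Int64List(value=[int(y)])),
        }))
        writer.write(example.SerializeToString())

# Read: describe the stored features, then parse every record.
feature_spec = {
    "x": tf.io.FixedLenFeature([4], tf.float32),
    "y": tf.io.FixedLenFeature([], tf.int64),
}


def parse(record):
    parsed = tf.io.parse_single_example(record, feature_spec)
    return parsed["x"], parsed["y"]


dataset = tf.data.TFRecordDataset(path).map(parse)
restored = [(x.numpy(), int(y.numpy())) for x, y in dataset]
print(len(restored), "records read back")
```

The feature description used for reading has to match what was written, since a TFRecord file does not store its own schema.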

Now that our data is loaded into TensorFlow, we can start training our model. A linear regression model, for example, can be built with tf.keras as a single Dense layer with one output unit.
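One way to express linear regression in tf.keras is sketched below. The synthetic data (y = 3x + 2 plus noise), the learning rate, and the number of epochs are assumptions made for the example, not settings taken from this tutorial.

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(0)

# Synthetic data following y = 3x + 2 plus a little noise (illustrative only).
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(256, 1)).astype(np.float32)
y = (3.0 * x + 2.0 + rng.normal(0.0, 0.05, size=(256, 1))).astype(np.float32)

dataset = tf.data.Dataset.from_tensor_slices((x, y)).shuffle(256).batch(32)

# A single Dense unit with no activation computes w * x + b.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(1,)),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1), loss="mse")

history = model.fit(dataset, epochs=5, verbose=0)
losses = history.history["loss"]
kernel, bias = model.layers[-1].get_weights()
print("final loss:", losses[-1])
```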

If you want to dive into data science and machine learning, you’ll need to be comfortable loading and manipulating datasets. In this tutorial, we’ll show you how to create your own custom dataset in TensorFlow.

To get started, we first need a dataset. You can find plenty of free datasets online, or you can create your own using TensorFlow. For this tutorial, we’ll use the MNIST handwritten digits dataset.

Next, we need to load the dataset into TensorFlow. We can do this using the tf.keras.datasets.mnist.load_data() function, which returns the training and test sets as NumPy arrays. After we've loaded the data, we can start working on it.

The first step is to convert the data from a NumPy array into a TensorFlow tensor. We can do this using the tf.convert_to_tensor() function. This function takes the data object and, optionally, a desired dtype. In our case, we want to convert the NumPy array of images into a TensorFlow tensor in which each entry represents one handwritten digit.
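The conversion might look like the sketch below. It assumes small zero-filled stand-in arrays with MNIST's image shape so that nothing has to be downloaded, and the scaling to [0, 1] is a common preprocessing choice rather than a requirement.

```python
import numpy as np
import tensorflow as tf

# Stand-in arrays shaped like a few MNIST images and labels (assumption).
images_np = np.zeros((4, 28, 28), dtype=np.uint8)
labels_np = np.array([3, 1, 4, 1], dtype=np.int64)

# Without a dtype argument, the tensor keeps the NumPy dtype.
images = tf.convert_to_tensor(images_np)

# The optional dtype argument states the dtype the tensor should have.
labels = tf.convert_to_tensor(labels_np, dtype=tf.int64)

# Cast to float and scale pixel values from [0, 255] to [0, 1].
images_float = tf.cast(images, tf.float32) / 255.0

print(images.dtype, labels.dtype, images_float.dtype)
```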

After we've converted the data, we can start working on it using the tf.keras API. tf.keras contains a variety of modules that help us work with machine learning models. In our case, we want to use the tf.keras.layers module to build a neural network model that recognizes handwritten digits from images.
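A small classifier built from tf.keras.layers could be sketched like this. The layer sizes, optimizer, and loss are illustrative assumptions, and the model is only run on a random batch here rather than trained on real digits.

```python
import tensorflow as tf

# Assumed architecture: flatten each 28x28x1 image, one hidden layer, 10 classes.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",  # labels are integers 0-9
    metrics=["accuracy"],
)

# Forward pass on a random batch to check the output shape.
batch = tf.random.uniform((4, 28, 28, 1))
probabilities = model(batch)
print(probabilities.shape)
```

Each output row holds ten class probabilities, one per digit. Training would then be a call to model.fit on a batched tf.data.Dataset of (image, label) pairs.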